Poke Interaction

Maintain an immersive user experience by ensuring virtual interactions adapt to the real environment

Developed during a summer internship at the UVR Lab at KAIST

Summary

With Meta Quest Pro and Unity, I recreated a problem I wanted to address in a VR environment, designed two adaptive methods for handling virtual content collisions with the real environment, and conducted a pilot test comparing the solutions.

Timeline

JUL - AUG 2024

The Problem

How can the system overcome the challenges of poke selection in complex physical environments, where real-life objects become obstacles in certain areas?

Specifically, using the Meta Quest Pro HMD

Research Method

Commercial Standard

Meta Quest Pro

does not recognize areas or real-life objects that are in the way of user interaction with a virtual object

transitions from poke selection to ray-casting if the user manually moves their hand out of the “hover area”

Apple Vision Pro

poke interaction is used for virtual keyboard

placement is not controlled by the user

Approach

Using Meta Quest Building Blocks, I represented the problem in the VR environment, designed two methods by which the system can adapt when it detects virtual content colliding with the real environment, and conducted a pilot test of an experiment comparing the two solutions.

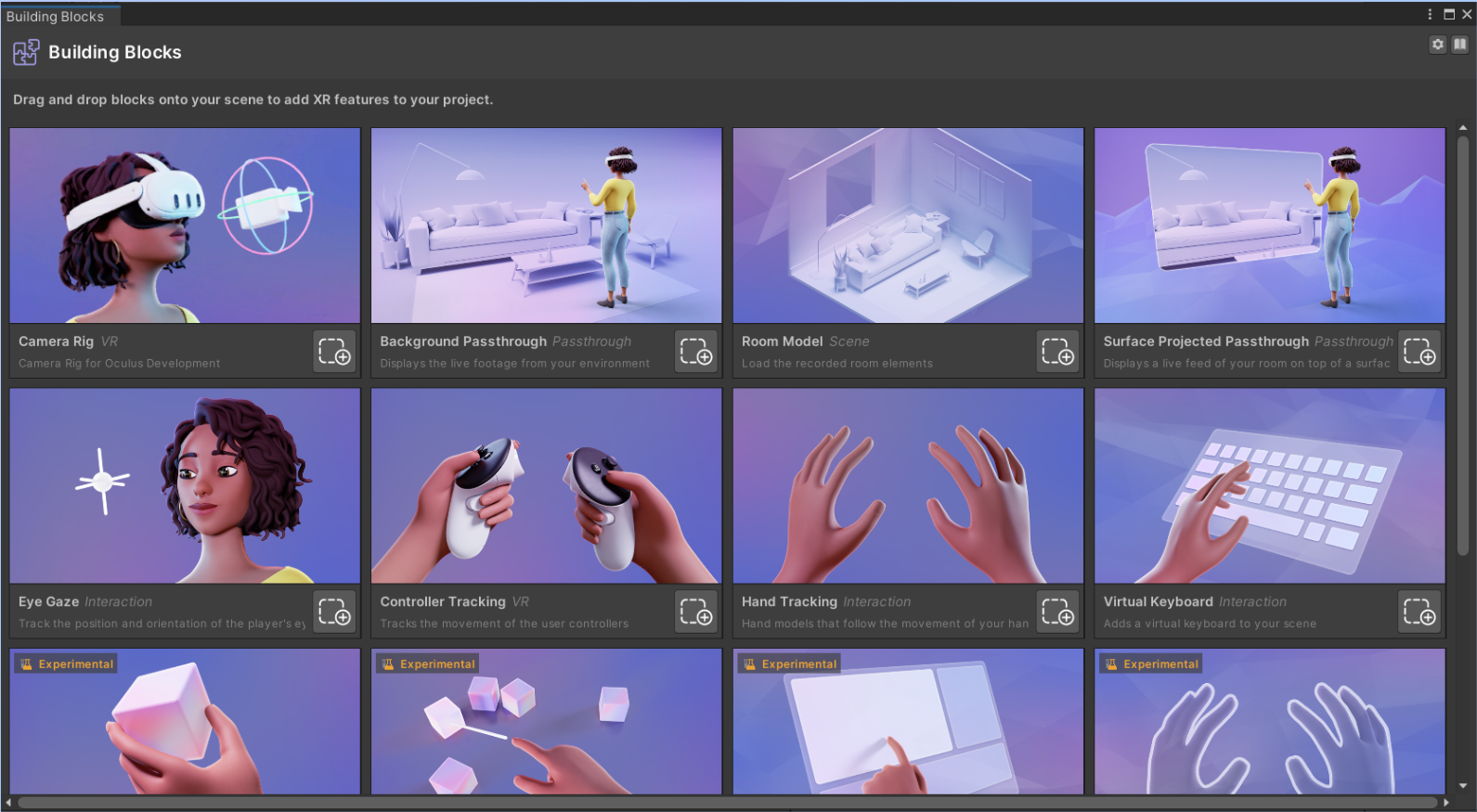

Unity Meta Quest Building Blocks

Poke Interaction Block

DEFAULT

The physical object (pink desk) is an obstacle to the virtual object (screen), limiting selection and the user's area of interaction

TASK given to both Solutions

“Use your dominant hand's index finger to select the cards in order from smallest to largest”

Solution A - Bounce

The screen bounces off a colliding real object and automatically repositions itself at a reachable location, keeping information and interactive elements together

Solution B - Hover

The screen stays in its original position, but the interactive surface (shown as blue cards) is automatically repositioned to a reachable location, separating the information and interactive elements

Comparisons

TriPad: Touch Input in AR on Ordinary Surfaces with Hand Tracking Only [CHI ’24]

Horizontal Plane Haptic Redirection: Realizing Haptic Feedback for the Virtual Inclined Plane in VR [IEEE ’24]

Although both papers focused more on haptic feedback provided by matching the real-world surface with the virtual surface, they offered insights for designing the logic behind the two solutions.

Contribution

It's important to explain the logic behind the two interaction-design solutions.

Both interaction solutions are forms of context-aware placement. The screen automatically readjusts its position based on the colliders of the interactive screen and of the surrounding environment. If it recognizes that the colliders are overlapping, it moves by an amount based on the size of the interactive screen.

Solution A - Bounce

Bounce Transform

initiated once collider is triggered

translate the virtual object until the two objects are no longer colliding
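The Bounce Transform steps above can be sketched outside Unity. This is a minimal Python illustration, not the project's script: the `Box` class is a hypothetical axis-aligned stand-in for a collider's bounds, and the push direction, step size, and all positions are assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned box: a hypothetical stand-in for a collider's bounds."""
    center: Vec3
    half_size: Vec3


def overlaps(a: Box, b: Box) -> bool:
    """Two boxes overlap only if their extents overlap on every axis."""
    return all(
        abs(a.center[i] - b.center[i]) < a.half_size[i] + b.half_size[i]
        for i in range(3)
    )


def bounce(screen: Box, obstacle: Box, direction: Vec3,
           step: float = 0.05, max_steps: int = 1000) -> Box:
    """Translate `screen` along `direction` in fixed steps until it is clear.

    `direction` and `step` are assumptions; the source only states that the
    object is translated until the two objects no longer collide.
    """
    length = sum(d * d for d in direction) ** 0.5
    if length == 0:
        raise ValueError("direction must be non-zero")
    unit = tuple(d / length for d in direction)

    current = screen
    for _ in range(max_steps):
        if not overlaps(current, obstacle):
            return current
        moved_center = tuple(c + step * u for c, u in zip(current.center, unit))
        current = Box(moved_center, current.half_size)
    if not overlaps(current, obstacle):
        return current
    raise RuntimeError("no collision-free position found within max_steps")


# Example with made-up numbers: a screen that intersects a desk is pushed up.
desk = Box(center=(0.0, 0.4, 0.5), half_size=(0.6, 0.4, 0.4))
screen = Box(center=(0.0, 0.7, 0.5), half_size=(0.3, 0.2, 0.01))
moved = bounce(screen, desk, direction=(0.0, 1.0, 0.0))
```

A fixed step keeps the sketch simple; a smaller step places the screen closer to the obstacle's edge at the cost of more iterations.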

Match Rotation

The screen is kept perpendicular to the gaze vector

Apply the x rotation of the reference object (Center Eye Anchor) to the responsive object (virtual screen)
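The Match Rotation step can be sketched in the same way. This Python example assumes rotations are given as Euler angles in degrees, ordered (x, y, z), and that a positive x rotation tilts the forward vector downward, as in Unity's convention. The function names and the sample angles are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def pitch_from_gaze(gaze: Vec3) -> float:
    """Rotation about the x axis (degrees) implied by a forward gaze vector.

    Assumes +y is up, +z is forward, and positive pitch means looking down.
    """
    gx, gy, gz = gaze
    horizontal = math.hypot(gx, gz)
    return math.degrees(math.atan2(-gy, horizontal))


def match_x_rotation(screen_euler: Vec3, reference_euler: Vec3) -> Vec3:
    """Copy only the x rotation of the reference onto the screen."""
    return (reference_euler[0], screen_euler[1], screen_euler[2])


# Example with made-up angles: the head looks 20 degrees down,
# so the screen tilts by the same amount and keeps its own y and z rotation.
head_euler = (20.0, 90.0, 5.0)
screen_euler = (0.0, 30.0, 0.0)
tilted = match_x_rotation(screen_euler, head_euler)
```

Copying only the x component leaves the screen's heading and roll untouched, so it tilts toward the viewer without swinging sideways.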

Solution B - Hover

Interactive Surface Translation

initiated once collider is triggered

translate the “Surface” (with interaction) forward from the virtual screen (with information), keeping the two parallel, until it no longer collides with the physical object

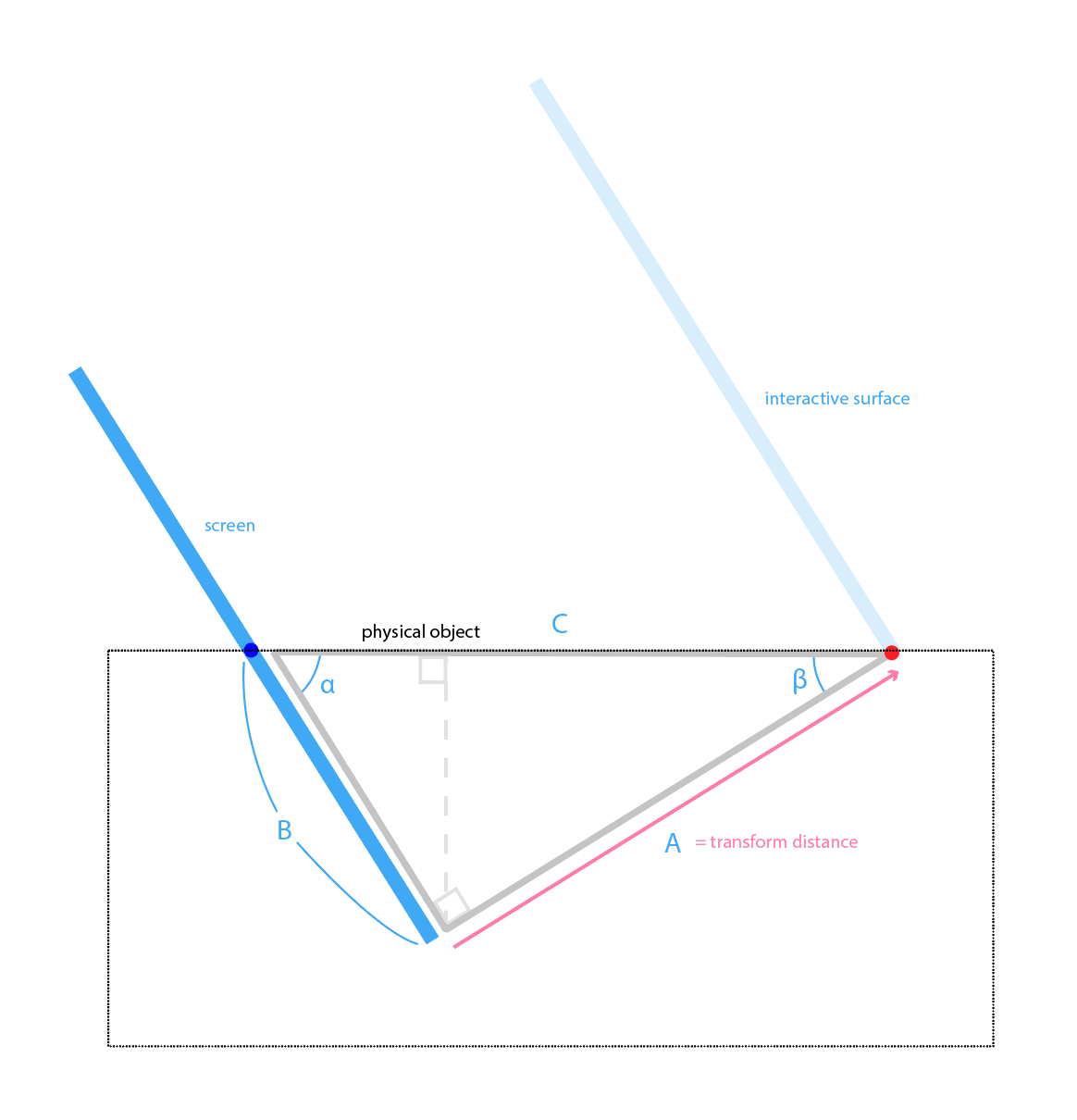

If the screen is placed at an angle…

calculate side A from angle α and side B

If the screen is vertical…

translate horizontally until no collision
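For the angled case above, the calculation reduces to right-triangle trigonometry. The sketch below assumes that the triangle in the figure is right-angled, that side B is adjacent to α, and that side A is opposite it, so A = B · tan α. If B were the hypotenuse instead, the relation would be A = B · sin α. The function name and the sample values are hypothetical.

```python
import math


def side_a_from_angle(side_b: float, alpha_deg: float) -> float:
    """Length of side A, assuming A is opposite alpha and B is adjacent to it.

    This labelling is an assumption about the figure, not taken from it.
    """
    if not 0.0 < alpha_deg < 90.0:
        raise ValueError("alpha must be strictly between 0 and 90 degrees")
    if side_b < 0:
        raise ValueError("side_b must be non-negative")
    return side_b * math.tan(math.radians(alpha_deg))


# Example with made-up numbers: B = 0.2 m at a 45 degree tilt gives A of about 0.2 m.
offset = side_a_from_angle(0.2, 45.0)
```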

Pilot Testing

Conducted a pilot test, with five participants, of an experiment comparing the two interactions.

Quantitative Findings

The following data were gathered during testing and saved as CSV files in the Unity project folder:

Task Completion Time

Eye Gaze Left/Right

Oculus Hand Left/Right

Center Anchor

Index Finger Tip Marker Left/Right

Results

Task Completion Time

The Solution A task generally took participants longer

Most participants assumed they were faster in the Solution B task, with one exception (P3)

Hand travel distance (reach)

X is consistent between the two graphs because the given task was the same

Y and Z determine the participant's reach; the graph shows that Solution A requires more movement and a farther reach
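One way to quantify hand travel from logged positions is sketched below in Python. The column names `x`, `y`, `z` and the sample rows are assumptions for illustration; the real CSV layout is not described here.

```python
import csv
import io
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def load_positions(csv_text: str, columns=("x", "y", "z")) -> List[Vec3]:
    """Parse position samples from CSV text (column names are hypothetical)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [tuple(float(row[c]) for c in columns) for row in reader]


def axis_extent(points: List[Vec3]) -> Vec3:
    """Range covered on each axis: max minus min for x, y and z."""
    return tuple(
        max(p[i] for p in points) - min(p[i] for p in points)
        for i in range(3)
    )


def path_length(points: List[Vec3]) -> float:
    """Total distance travelled between consecutive samples."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


# Made-up samples: the fingertip moves 0.5 forward, then 0.5 up.
sample = "x,y,z\n0.0,1.0,0.0\n0.0,1.0,0.5\n0.0,1.5,0.5\n"
points = load_positions(sample)
extent = axis_extent(points)
travelled = path_length(points)
```

Comparing the y and z extents between the two solutions gives a per-axis view of reach, while the path length summarizes overall movement.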

Important Takeaway

Pilot testing shows only a tendency; I would need to conduct a full empirical experiment to draw any statistically significant conclusions.

Qualitative Findings

The following questions were asked after testing:

With which method do you think you finished the task faster?

How was the reach for both A and B methods?

On a scale of 1-5, how much control did you have over the selections? (1 = poor, 5 = full)

Which method do you prefer?

Participants then rated the following statements on a 5-point Likert scale for both solutions.

I think this system would be helpful in given circumstances (Strongly disagree - Strongly agree)

I found the system unnecessarily complex (Strongly disagree - Strongly agree)

Ease of object selection (Very Dissatisfied - Very Satisfied)

RESULTS

Error Cases

Solution A Task

Participants did not fully select the button and stopped at hovering (P1, P3, P4)

Solution B Task

One participant reached behind the interactive surface and tried to select the cards directly (P1)

Subjective Feedback

Solution A Task

“needs more affordance” (P2)

“not sure how far to press” (P1)

“harder to reach but more straightforward” (P3)

Solution B Task

“the boundary or the extent of movement required was visually shown” (P4)

Key Takeaways + Moving Forward

Limitation

The pilot test was set in VR only. AR testing is possible using spatial anchors, but accessing the Quest space model requires building and running the Unity app on the headset. In that case the Quest Link connection between the device and the computer is broken, which prevents real-time data collection in Unity, so I can no longer access hand and gaze data (position and rotation) in CSV format.

Next

With the gathered eye-tracking data, I'm interested to see how participants' gaze dynamics differ when information and interactables are together on the same surface versus when they are separated.

In Solution B, the cards are displayed on the back plane (blue), while the interactive surface is located on the front plane (pink). I'm interested in analyzing how participants distribute their attention between these two planes by examining the collected eye gaze data.